New. Demo. Reel.

Even if it’s not for “work”, I always end up playing with various CV-related techniques … it’s like art therapy. And every so often I’ll compile some recent therapy projects into a demo reel. But I do have one self-imposed rule for my demo reel … everything has to be made from complete scratch … I can use basic tools (OpenCV, TensorFlow) … and public datasets … but … absolutely nothing else: no pre-made models (not even VGGX, Inception, etc.), nothing. If I need a tool, I have to make it. If I need a model … gotta train it from scratch. Keeps things fun. Anyway, here’s my Summer 2020 Demo Reel … hope you enjoy!

Alternate links:

https://youtu.be/BogqV5qnPFA

https://vimeo.com/409445782/d758ede0a8

The video above has chapters built into the player, or you can click an image below to jump to a particular section.

It bugs me when I see a CV technique that I haven’t implemented from absolute scratch … because otherwise you don’t really feel how stuff works. 🙂 So I was way, way overdue to implement some recent-ish image-to-pose techniques. I settled on the convolutional pose machine / part-affinity fields family of work (confidence-map-based), and the result is a nice little demo on a freestyle dance video.
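For anyone wanting to try the same exercise: the confidence-map family trains a network to regress one heatmap per keypoint and, for part-affinity fields, a 2D unit-vector field per limb. Below is a minimal NumPy sketch of how those training targets can be built, plus how a candidate limb can be scored by sampling the field along it. The function names and the `sigma`, `limb_width` and `num_samples` values are illustrative choices, not the ones used in the demo.

```python
import numpy as np

def confidence_map(h, w, keypoint, sigma=4.0):
    """Gaussian heatmap target peaked (value 1.0) at keypoint (x, y)."""
    ys, xs = np.mgrid[0:h, 0:w]
    x0, y0 = keypoint
    d2 = (xs - x0) ** 2 + (ys - y0) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))

def part_affinity_field(h, w, p1, p2, limb_width=3.0):
    """2-channel field: unit vector p1 -> p2 on pixels near the limb, zero elsewhere."""
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    field = np.zeros((2, h, w))
    length = np.linalg.norm(p2 - p1)
    if length < 1e-6:
        return field
    v = (p2 - p1) / length                       # unit limb direction
    ys, xs = np.mgrid[0:h, 0:w]
    dx, dy = xs - p1[0], ys - p1[1]
    along = dx * v[0] + dy * v[1]                # position along the limb axis
    across = np.abs(dx * v[1] - dy * v[0])       # perpendicular distance to the axis
    on_limb = (along >= 0) & (along <= length) & (across <= limb_width)
    field[0][on_limb] = v[0]
    field[1][on_limb] = v[1]
    return field

def limb_score(field, p1, p2, num_samples=10):
    """Mean dot product between the field and the candidate limb direction."""
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    d = p2 - p1
    norm = np.linalg.norm(d)
    if norm < 1e-6:
        return 0.0
    u = d / norm
    ts = np.linspace(0.0, 1.0, num_samples)
    pts = p1[None, :] + ts[:, None] * d[None, :]   # sample points on the segment
    xs = np.clip(np.round(pts[:, 0]).astype(int), 0, field.shape[2] - 1)
    ys = np.clip(np.round(pts[:, 1]).astype(int), 0, field.shape[1] - 1)
    vecs = field[:, ys, xs]                        # shape (2, num_samples)
    return float(np.mean(vecs[0] * u[0] + vecs[1] * u[1]))
```

A correctly oriented limb scores close to 1, a reversed one close to -1, and an unrelated pair close to 0, which is what makes the field useful for grouping detected keypoints into people.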

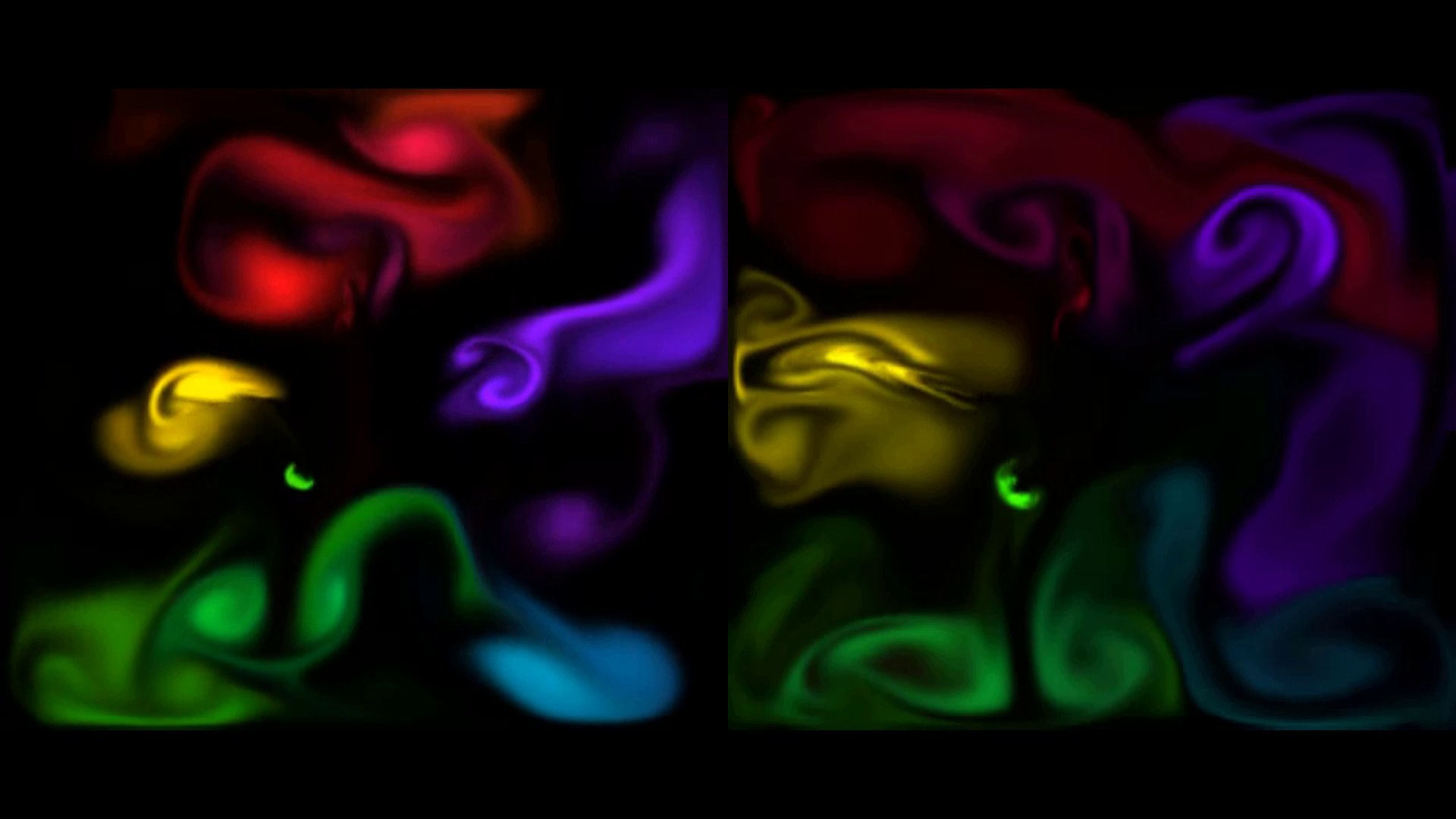

I’ve always loved this particle demo by Matthias Müller and wanted to make something using fluids/particles synced to music. I was also inspired by recent work using deep nets with fluid simulations … so this was the result: deep nets animating Navier-Stokes fluids synced to music. Nothing crazy, and I didn’t really follow any paper here; I just kind of made the techniques up as I went along until I liked the result.
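Since the demo doesn’t follow a specific paper, here is just one common building block for grid-based Navier-Stokes solvers: semi-Lagrangian advection, as in Jos Stam’s “Stable Fluids”. It’s a sketch assuming a collocated grid with unit cell spacing and clamped boundaries; a full solver would also need diffusion and a pressure-projection step, which are omitted here.

```python
import numpy as np

def advect(q, u, v, dt):
    """Semi-Lagrangian advection of a scalar field q by velocity (u, v).

    Assumes unit grid spacing; u is the x-velocity and v the y-velocity,
    both sampled at cell centers. Back-traced points are clamped to the grid.
    """
    h, w = q.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    # Trace each cell center backwards along the velocity field.
    x_src = np.clip(xs - dt * u, 0, w - 1)
    y_src = np.clip(ys - dt * v, 0, h - 1)
    # Bilinearly interpolate q at the back-traced positions.
    x0 = np.floor(x_src).astype(int)
    y0 = np.floor(y_src).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx = x_src - x0
    fy = y_src - y0
    top = q[y0, x0] * (1 - fx) + q[y0, x1] * fx
    bottom = q[y1, x0] * (1 - fx) + q[y1, x1] * fx
    return top * (1 - fy) + bottom * fy
```

Because every cell pulls its value from an interpolated upstream location, the step stays stable for large `dt`, which is handy when the velocity field is being driven by something as jumpy as a network reacting to music.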

Yeah, this one is super boring. 🙂 I kind of hate segmentation/detection ’cause they’re so common … but I tried to make this somewhat interesting by training only on the Oxford-IIIT Pet Dataset and, of course, not using base networks like VGGX/Inception etc. … so the challenge was seeing what kind of results you could get by training only on a limited dataset (with augmentation, of course).
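With a small dataset, augmentation does a lot of the work, and for segmentation the key rule is that geometric transforms must be applied identically to the image and its mask, while photometric changes touch only the image. A minimal NumPy sketch, assuming float images in [0, 1] shaped (H, W, C) and an (H, W) mask; `augment_pair` and its flip/crop/gain choices are illustrative, not the exact pipeline used here.

```python
import numpy as np

def augment_pair(image, mask, crop_size, rng):
    """Apply the same random flip and crop to image (H, W, C) and mask (H, W)."""
    # Geometric: identical horizontal flip for both arrays.
    if rng.random() < 0.5:
        image = image[:, ::-1]
        mask = mask[:, ::-1]
    # Geometric: identical random crop for both arrays.
    h, w = mask.shape
    ch, cw = crop_size
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    image = image[top:top + ch, left:left + cw]
    mask = mask[top:top + ch, left:left + cw]
    # Photometric: brightness jitter on the image only, never the label mask.
    gain = rng.uniform(0.8, 1.2)
    image = np.clip(image * gain, 0.0, 1.0)
    return image, mask

rng = np.random.default_rng(0)
img = rng.random((64, 64, 3))
msk = (img[..., 0] > 0.5).astype(np.uint8)
aug_img, aug_msk = augment_pair(img, msk, (48, 48), rng)
```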

This is a pretty involved system I made for the last start-up I co-founded. It performs line/edge-based localization against a model in real time (20+ FPS) on low-powered Android devices. The coolest part is the line-segment detector, which runs entirely on the GPU at 40 FPS (faster than the camera). This tech actually saw field use on real construction, doing QA/QC with actual BIM models.
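For readers curious how line-based localization works in general: a common formulation projects 3D model line segments with the current pose estimate and measures how far the projected endpoints fall from their matched detected image lines; a nonlinear least-squares solver (e.g. Gauss-Newton) then adjusts the pose to shrink those residuals. The sketch below shows only that residual, assuming a pinhole camera with intrinsics `K` and pose `(R, t)`. It is a generic illustration, not the system described above, and the helper names are hypothetical.

```python
import numpy as np

def project(K, R, t, X):
    """Pinhole projection of 3D points X (N, 3) to pixel coordinates (N, 2)."""
    Xc = X @ R.T + t          # world -> camera coordinates
    x = Xc @ K.T              # camera -> homogeneous pixel coordinates
    return x[:, :2] / x[:, 2:3]

def line_from_points(p, q):
    """Normalized image line (a, b, c), a*x + b*y + c = 0 with a^2 + b^2 = 1."""
    l = np.cross([p[0], p[1], 1.0], [q[0], q[1], 1.0])
    return l / np.hypot(l[0], l[1])

def line_residuals(K, R, t, model_segment, image_line):
    """Signed pixel distances from a projected model segment's endpoints to an image line."""
    a, b, c = image_line
    pts = project(K, R, t, np.asarray(model_segment, dtype=float))
    return pts[:, 0] * a + pts[:, 1] * b + c
```

Using point-to-line distances rather than endpoint-to-endpoint distances means the residual doesn’t care where a detected segment starts or stops, which matters because real edge detections are often broken up or partially occluded.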

This is from a project, funded by the National Eye Institute, designed to give visually impaired users a way to remotely read signs in their environment using very low-powered mobile devices. I actually got to demo this hands-on at a Blind Veterans Conference, which was definitely a career/life high for me.

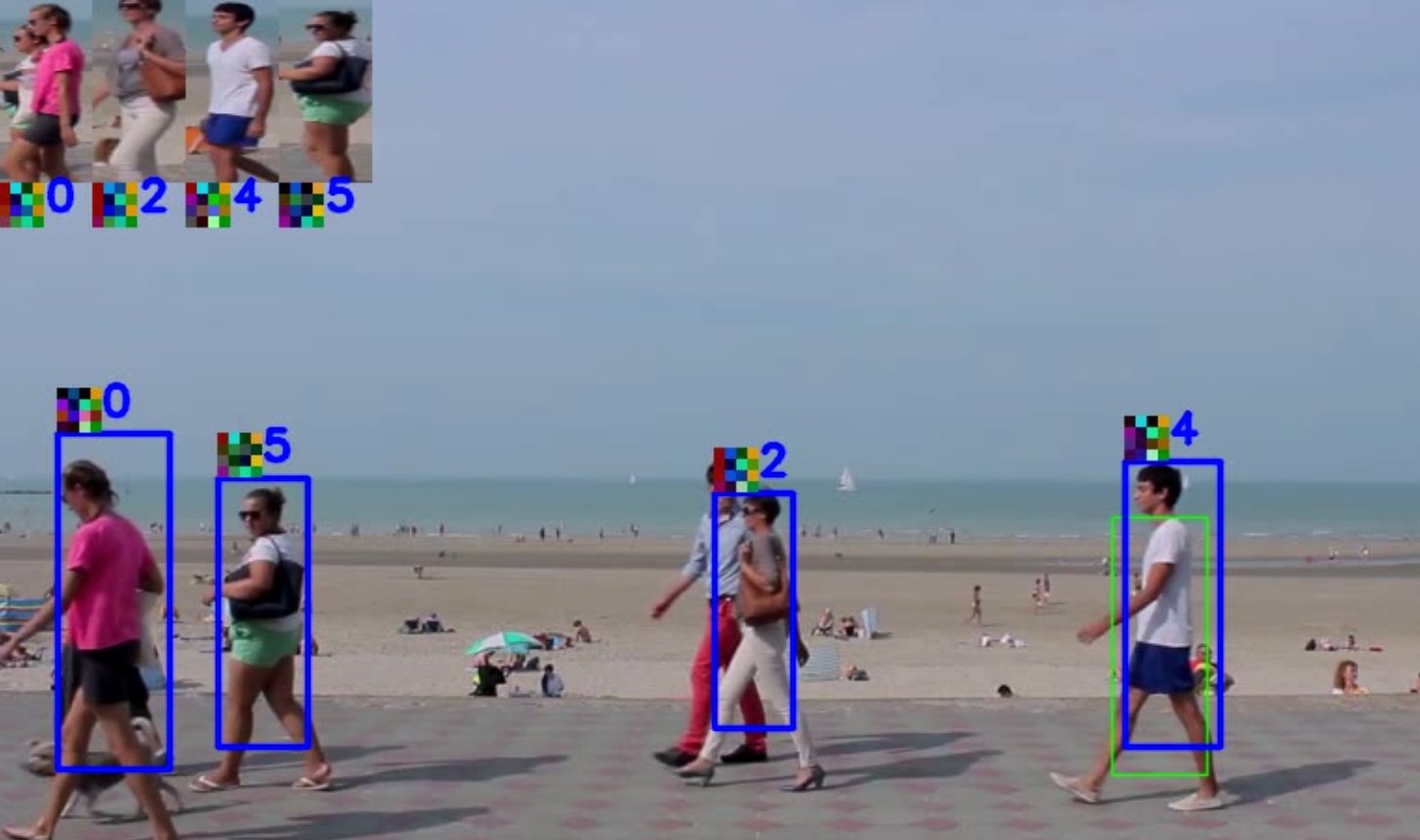

Standard stuff, up there with detection and segmentation in the boring department. I did get to do something I’d always wanted to do, though: create a color grid showing the network’s internal “one-shot” representation of the various pedestrians.
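One simple way to turn per-pedestrian embedding vectors into a color grid is to project them onto their top three principal components and use those as RGB, so that similar representations get similar colors. This is an assumed approach for illustration only (the text doesn’t say how the grid was made), and it needs at least three embeddings of dimension three or more.

```python
import numpy as np

def embeddings_to_colors(emb):
    """Map (N, D) embeddings to (N, 3) RGB in [0, 1] via the top-3 principal components."""
    centered = emb - emb.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    proj = centered @ vt[:3].T
    lo, hi = proj.min(axis=0), proj.max(axis=0)
    return (proj - lo) / np.maximum(hi - lo, 1e-12)

def color_grid(colors, cols):
    """Arrange N colors into a (rows, cols, 3) image, padding unused cells with black."""
    n = len(colors)
    rows = -(-n // cols)                 # ceiling division
    grid = np.zeros((rows * cols, 3))
    grid[:n] = colors
    return grid.reshape(rows, cols, 3)
```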

F.A.Q.

Q. What does your language/environment workflow look like?

I’ve been doing various types of work in the computer vision field for well over a decade … and during that time I’ve worked in most environments and languages you might care to imagine. So my answer would be “whatever it takes to get the job done”.

Generally, though, my language/tool flow is chosen to support fast iteration. I also have a golden rule, “always be ready to demo”, that guides most of my development decisions. I like to have something to show as fast as possible, and then rapidly iterate on improvements.

So I usually spend a bit of time looking at the project data in MATLAB first … just understanding how the data feels, and I’ll prototype anything I can there. Then I’ll take it over to Python to build any data processing tools I need (if I’m training a network, for example) and make some further proofs of concept. If it’s a project involving deep networks, I’ll definitely use Python/TF/Keras and quickly prototype some network architectures on small datasets, even if they perform terribly at first … then try different things to improve performance. For demo-reel-level stuff, I may just stop at Python, but when I’m doing a job I’ll generally end up coding the final work in C++ (or Swift or Kotlin if it’s a mobile app) … and taking any models I can to TFLite. Then, for production work, I’ll usually end up spending a lot of time in a performance profiler, looking to cut about 25%-50% off the execution time with … common optimizations, memory layouts, data formats, etc.
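As a small illustration of the memory-layout side of that profiling work: converting a tensor between NCHW and NHWC in NumPy with `transpose` only changes strides, so code that then walks the channel axis reads scattered memory. The sketch below makes the data contiguous with `np.ascontiguousarray` and times a reduction both ways. `nchw_to_nhwc` and `time_it` are hypothetical helpers, a best-of-N timer is only a crude stand-in for a real profiler, and the timings will vary by machine.

```python
import time
import numpy as np

def nchw_to_nhwc(x):
    """Reorder (N, C, H, W) to (N, H, W, C) as a C-contiguous copy."""
    # transpose() alone returns a strided view; ascontiguousarray copies the
    # data so that the channel values of each pixel sit next to each other.
    return np.ascontiguousarray(x.transpose(0, 2, 3, 1))

def time_it(fn, *args, repeats=5):
    """Best-of-N wall-clock time in seconds for fn(*args)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        best = min(best, time.perf_counter() - start)
    return best

if __name__ == "__main__":
    x = np.random.default_rng(0).random((4, 32, 128, 128), dtype=np.float32)
    view = x.transpose(0, 2, 3, 1)
    packed = nchw_to_nhwc(x)
    print(view.flags["C_CONTIGUOUS"], packed.flags["C_CONTIGUOUS"])  # False True
    # Reducing over the channel axis is typically faster when it is contiguous.
    print("strided view:", time_it(np.sum, view, -1))
    print("packed copy: ", time_it(np.sum, packed, -1))
```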

Q. What’s with the rabbit?

Some version of a “fire rabbit” is my symbol/joke for how humans have always had a love affair with artificial intelligence. I use zestyfirehare (thus the fire rabbit) as a pseudonym sometimes … zestyfirehare being an anagram of Sefer Yetzirah … which in turn is related to golems … which I have a fascination with. Because, as an artificial intelligence guy, when I hear about the concept of a “golem” across various historical narratives (clearly a man-made thing, can move around on its own, performs tasks for its creators, can learn) … well, that’s … A.I. right there. And when you view it like that … wow, apparently humans have been itching to make strong artificial intelligence for a very long time. So that’s the deal with the (fire) rabbit. 🙂

Q. Do you have any flowery Silicon Valley nonsense statement about your mission?

I used to say that I like to give users “Any sufficiently advanced technology is indistinguishable from magic” moments. But that got Silicon Valley-types way too excited for the wrong reasons, so more recently I’ve been saying that I like to “entertain people with math” … which is closer to what I actually mean. 🙂

I don’t consider any technology “advanced” or “magical” … but I really, really, really enjoy that stunned/amazed smile on a user’s face when they see some tech work that they didn’t think was possible before.

As a result, I rarely work on a problem for a problem’s sake … for me I have to be able to delight/entertain somebody with the solution for a problem to be interesting. Haha … thinking about it I guess the one exception is my demo reel work … but then … that brings a smile to my face so there you go. 🙂

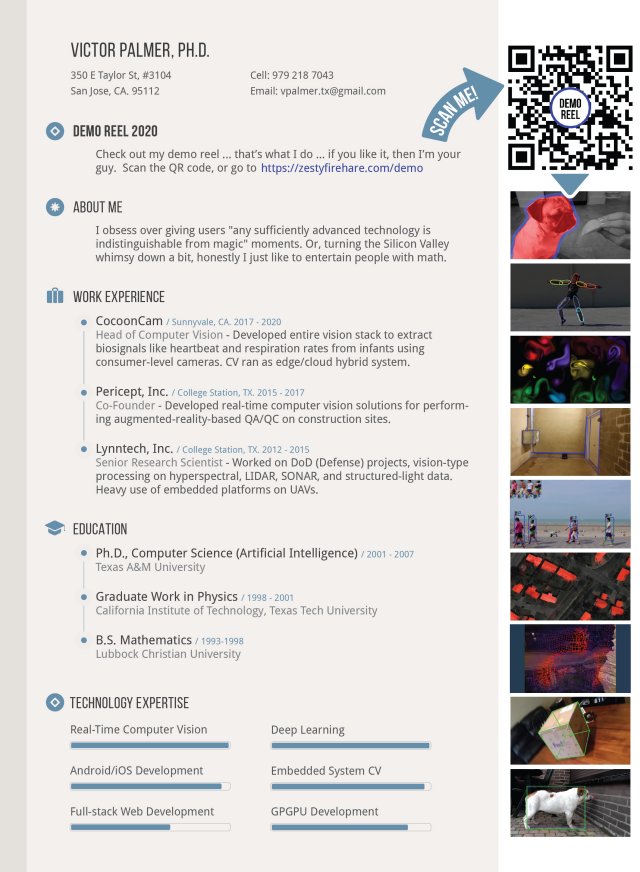

Resume

My demo reel is pretty much my resume, but if you want a PDF … there you go!